[*even when they’re labeled “course goals”]

I’ve been puzzling over the course goals, etc., for a new grad seminar in pedagogy I’m teaching this spring, and I think I have finally pinpointed the single most frustrating aspect of the language of assessment for me, especially when it’s used to guide, direct, or evaluate instruction: the reversal of priorities it seems to entail, in which assessment drives pedagogical decision-making instead of the other way around.

Assessment, if it’s viewed as something that manages or directs pedagogy, threatens to take faculty away from all the stuff that we love and value in teaching (e.g., literature, disciplinary research, students, discussions, interactions, etc.) towards stuff that we may never love, or only barely value (e.g., quantification, social science notions of data and evidence, standardized teaching methodologies, bureaucratic protocols of compliance).

Yet even with these caveats, I still believe that these kinds of assessment exercises have the potential to improve our instruction, so long as they’re conceived as another form of feedback for faculty to use in the creation and revision of our courses. And I think that any course about pedagogy nowadays needs to introduce future teachers to the complex relation of assessment to one’s classroom practice.

Some of the best, most lucid discussions of these issues can be found in Erickson et al.’s book, Teaching First-Year College Students, which is designed to help instructors of first-year students understand the sheer difficulty and significance of this transition for students. But the book is comprehensive enough to help new teachers at any level understand the challenges of teaching and learning in contemporary universities.

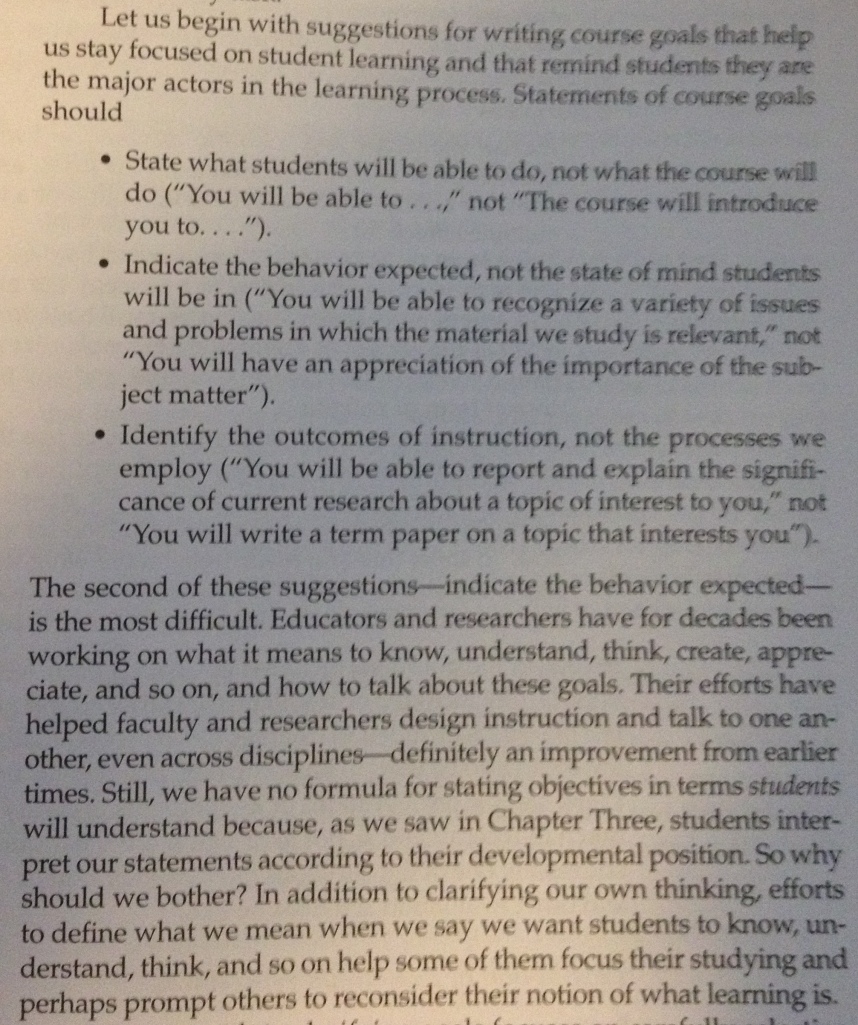

So here’s the paragraph from Erickson et al. (p. 71) that I was using to think about my own learning objectives/course goals:

The process of drawing up these course goals begins by moving the focus away from the person teaching the course to the students taking the course. In other words, we move from “the course will do X” to “students will be able to do Y.” This is a difficult but useful shift in perspective that I think most teachers would endorse.

What is truly counterintuitive is the major shift identified in the quote: “indicate the behavior expected, not the state of mind students will be in” [emphasis mine]. In other words, what outward behavior or activities manifested by students would provide visible, or even measurable, evidence that students are indeed “knowing, understanding, or thinking” the content of your course? What kinds of evidence can you provide that would corroborate your intuition that student A knew, understood, or thought better than student B?

I believe that experienced teachers intuitively regard “student thinking” as something that they are able to engage with, understand, assess, or try to improve, even if our intuitions and experience can be shown to be fallible.

Redirecting teachers’ attention strictly to student behavior, however, takes us away from our perceptions of students’ thinking, and often forces our attention onto the lowest-level tasks and students’ demonstrated acts of compliance, which are of course the easiest parts of student activity to measure. The extent to which we demand that students “know, understand, or think” seems to vanish from this minimalist depiction of learning. And higher ed teachers are particularly baffled by this kind of goal displacement when discussions of “critical thinking” or “higher-level learning” ignore disciplinary “ways of thinking” that remain tacit or opaque to outsiders.

Unless really ingenious methods of indirect observation are put into effect, the minimal, behaviorist picture of learning is where most assessments of learners and learning remain. They essentially inform us of the number of students attending classes and the number of hours they filled seats and drew upon “resources,” meaning instructor time and possibly attention. In some sense, the “competency movement” represents the instructional model that this kind of assessment and its advocates would move towards, but there are real questions about whether it can be done credibly enough to compete with more traditional educational approaches. But the biggest difficulty for all these externally focused programs of assessment is that they are uninterested in the quality of the interactions or learning that would define an experience as “education” in our usual sense of the term. There is nothing transformative, or even potentially transformative, in these kinds of experiences.

The most infuriating aspect of this situation, however, comes when this behaviorist language of assessment, having rendered most higher-level work invisible, demands that something called “critical thinking” or “upper-level learning” be taught and assessed using the methods least suited to generating or observing it. In this scenario, true evaluations of success or failure are essentially irrelevant to the system being built, because neither the student nor the teacher has the power to alter it.

Having said all this, I agree with Erickson that an integration of pedagogy with assessment (via strategies like provisional, instructor-written course goals) remains a worthwhile activity, because it helps clarify to ourselves and our students what we’re attempting to do. In other words, this integration of pedagogy with assessment should be done to the extent that it improves our teaching or our students’ experiences of learning. Anything beyond that feels like a displacement of our genuine goals and values regarding teaching.

DM

The more experience I have with assessment, the more I am coming to believe that there is a major division between liberal arts and professional schools, and that one challenge is that most guidelines for assessment (and I would guess most university implementations) don’t make this distinction. To be clear: liberal arts include science and math; professional schools include business schools and schools of education, library science, etc. I realize that there is a lot of overlap, and that professional schools can teach more abstract matters and social problems, but they also tend to be organized around training students to become some sort of professional. This professionalism lends itself more readily to being assessed through a set of behaviors. Certainly a liberal arts education can lead to a profession, but the student outcome is not a set of professionalized behaviors. This makes all liberal arts outcome goals (not just humanities ones) really hard to assess using the behavior model, because what we really want is for students to come to new levels of understanding and open themselves up to new ways of thinking. That does not mean we should throw up our hands and say that it can’t be done. But it does mean, I think, that our assessment practices are always going to be more speculative and look messier. I do think something like “thinking like a literary critic” or “thinking like an historian” is on the right track, although I think assessment for liberal arts programs remains a problem that needs more work and thought.

Oh, absolutely. Whether we call it professionalization or vocationalization of higher ed, this trend reframes our view of the liberal arts as containing all sorts of arcane, valueless stuff that can’t be named. The irony is that a good deal of research suggests that the more vocational the education, the less likely it is that higher-level learning, including things like critical thinking, will be an expectation. Arum and Roksa’s Academically Adrift suggests this, as does Saroyan and Amundsen’s Rethinking Teaching in Higher Education: see, for example, “we have found that students whose goals are vocationally oriented are less likely to pursue goals of thinking and evaluating (Donald and Dubuc 1999)” (60). So I have no doubt that a functionalist, reductive view of education is better and more easily served by this kind of behaviorist description than anything resembling the liberal arts would be.

For me, the question is whether, despite all this, there are potential benefits for folks in the humanities or the liberal arts. “Thinking like a historian or a literary critic” is probably very hard to pin down without at least some “behaviorist” description, but that is a very difficult act of translation, and most of those involved in these processes are not particularly concerned about how well they capture disciplinary thinking and practices.

I agree that it’s really hard to pin down such outcomes, but I wonder if the way to do this, if possible, would involve separating out liberal arts learning from the way learning takes place in professional schools. A second question might be: is it possible for liberal arts programs to identify their own outcomes, assessment processes, and style of thinking about whether or not students are learning, even if they share a campus with professional schools? Does the presence of professional schools necessarily lead to the vocationalization, as you put it, of the entire institution? I am not denying that such vocationalization exists (or claiming that liberal arts majors should not think about employment!), but I think that it should be possible, at least in theory, to allow professional schools to have their professional outcomes and liberal arts programs to have their liberal ones, and they will look rather different. OK, very different. And there will be very different ways of figuring out whether or not students are making progress.

Some thoughts: the first is that “professionalization” seems less off-putting if it occurs at the post-grad rather than the undergrad level. Much of this discussion, however, seems to forget that undergrads often move from one type of major to a different type of post-grad track. In other words, the movement from major to career track is probably getting less and less linear, but advising tends to assume a steady, uninterrupted path.

My next thought is that the kind of assessment work you’re talking about is being done in a number of fields (interestingly, not including English) in what’s being called the “Tuning Project.” I’ve been following the discussions at #AHA 2015, and they’ve had some good experiences doing what you suggest.

Try these tweeted talks and links, for a start:

http://www.historians.org/teaching-and-learning/current-projects/tuning/history-discipline-core

https://storify.com/DaveMazella/tuning-talk-at

http://sfy.co/swNj

I find it interesting that fields like economics and history and a few others have participated in these international initiatives, but nothing from literary studies that I’ve seen.